Summarized version:

- Purchase the following:

- Mellanox ConnectX-3 10GbE SFP card from eBay (~$24-$29 at the time of writing). Make sure it is an x4 card; x8 cards are too long.

- M.2 NGFF to PCI-E x4 adapter (~$10 for a 2-pack). Get the white one; the green ones are generic poop.

- PCI-E x4 extension ribbon cable from eBay ($5.68). I have since modified my setup to NOT use this ribbon cable; it proved unreliable at best. The original eBay listing is gone, but Newegg still has one for sale.

- Wall power adapter (12V) to Molex adapter.

- There are several ways to do this. I needed three, so I opted for a 5-pack of barrel connectors (~$13) and a couple of barrel-to-Molex adapters (~$6). This cuts down on space used (no mid-cable bricks on the floor).

- Download “Thicc Skull” from Thingiverse and print it on a 3D printer.

- Total spent on a single Skull Canyon setup (including the Mellanox card): $43.68

- The 3D-printed case is technically optional if you want to get tricky about it.

- Test. Enjoy.

This is nuts, but it works. Our micro homelabs are about to change forever.

We have an Intel Skull Canyon NUC ESXi cluster running 6.7u1. Each node is filled to the gills with 32GB of DDR4 SO-DIMM RAM (and was recently discovered to run with 64GB!). VMware runs like a champion, fully supporting the onboard 1G NIC, plugged into our 1G switch. All VMs live on all-flash storage served up via iSCSI datastores… via that same 1G NIC.

BAH with 1G, I say! Homelab is all about experimentation, and I want faster, but not hungrier (read: low TDP).
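To put a number on "faster", here is a quick Python sketch of how long moving a VM disk takes at 1G versus 10G. It assumes ideal line rate with no iSCSI/TCP/IP overhead and storage that can keep up, so real transfers will be slower; the 40 GB disk size is a hypothetical example, not a figure from our cluster.

```python
# Back-of-the-envelope: time to move a VM disk over 1 GbE vs 10 GbE.
# Assumption: ideal line rate, no protocol overhead (real iSCSI traffic is slower).


def transfer_seconds(size_gb: float, link_gbps: float) -> float:
    """Seconds to move size_gb gigabytes (10**9 bytes) over a link_gbps link."""
    size_gigabits = size_gb * 8  # 8 bits per byte
    return size_gigabits / link_gbps


if __name__ == "__main__":
    disk_gb = 40  # hypothetical VM disk size
    for link in (1, 10):
        print(f"{link:>2} GbE: {transfer_seconds(disk_gb, link):.0f} s")
```

Because the time scales inversely with link speed, the same disk moves ten times faster on the 10G link, all else being equal.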

Enter the Skull Canyon PCIe x4 Expansion Ring, or *drumroll*: Thicc Skull.

Thicc Skull allows any low-profile PCI-E x4/x1 card under 4″ long to be added to the Skull Canyon via a custom, hand-crafted-in-Blender, 3D-printable expansion ring. *gasps for air*
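Why an x4 link rather than x1 for a 10GbE card? The rough Python sketch below computes raw PCIe link bandwidth per lane count. It assumes PCIe 3.0 signaling (8 GT/s per lane, 128b/130b encoding) on both the M.2 slot and the card, and ignores packet overhead, so usable throughput will be somewhat lower than printed.

```python
# Raw PCIe 3.0 link bandwidth vs. 10 GbE line rate.
# Assumptions: PCIe 3.0 on both ends, only 128b/130b line encoding counted
# (TLP/DLLP packet overhead is ignored, so real throughput is lower).

GT_PER_LANE = 8.0        # PCIe 3.0 transfer rate per lane, GT/s
ENCODING = 128 / 130     # 128b/130b line-encoding efficiency
TEN_GBE = 10.0           # 10 GbE line rate, Gb/s (per direction)


def pcie3_gbps(lanes: int) -> float:
    """Raw usable bit rate in Gb/s for a PCIe 3.0 link with `lanes` lanes."""
    return lanes * GT_PER_LANE * ENCODING


if __name__ == "__main__":
    for lanes in (1, 4):
        bw = pcie3_gbps(lanes)
        print(f"x{lanes}: {bw:.2f} Gb/s, covers 10 GbE: {bw >= TEN_GBE}")
```

Under these assumptions, an x1 link falls just short of 10 GbE line rate, while x4 leaves plenty of margin.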

83 hours of modeling and test printing. Over 400 grams of filament blown on making sure measurements were as even as possible, while still leaving enough flex to fit multiple situations.

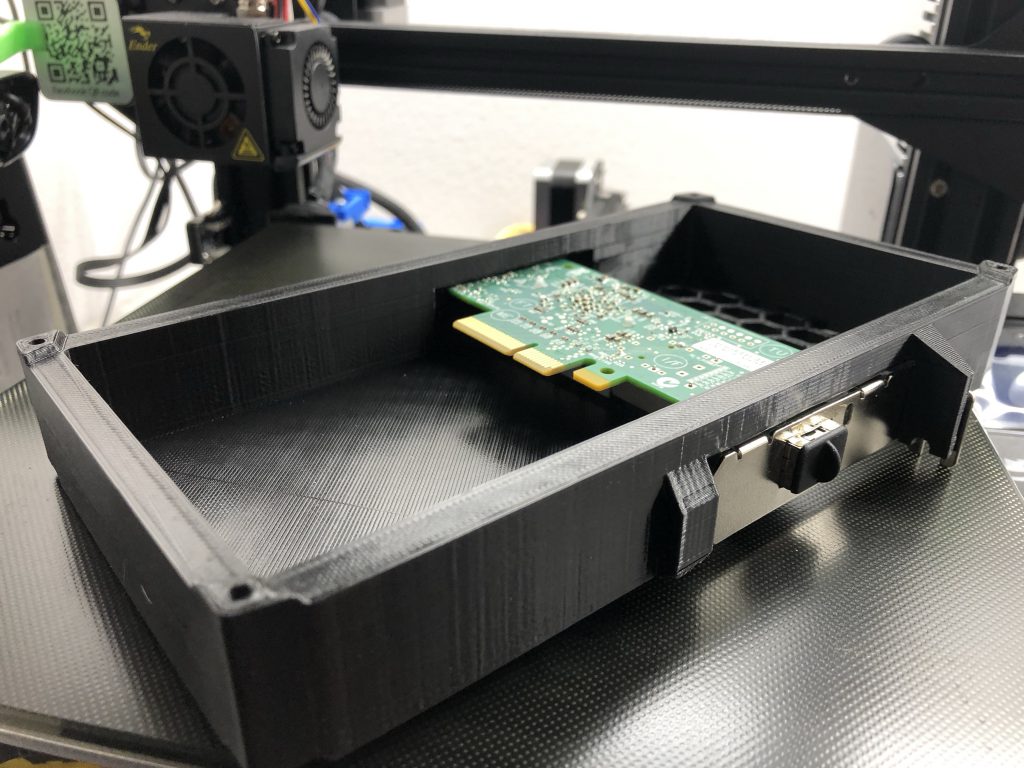

Before installing the NUC itself, install the card (in our case, the delightful Mellanox ConnectX-3 10GbE card), which gets inserted from the outside. Finagle your way in, being careful not to knock the “stabilizer” fin off.

There’s a nice little shelf at the back that the card can rest on. If you have a particularly tall heatsink, a landing spacer is also available to print, included in the .STL download on Thingiverse. It lets the card sit a hair higher so the heatsinks don’t touch the PLA. Please don’t let your card’s heatsinks rest on the bottom of the 3D print; it will melt.

Fully hooked up, the inside looks something like the picture above. I’ve opted to install ESXi 6.7u1 onto an Adata M.2 SSD in the first M.2 slot. The second slot contains the all-magical M.2 riser card, connected to the 10GbE NIC via the x4 extension cable. (NOTE: As a precaution, not pictured, I wrapped the x4 extension cable in electrical tape near the DDR4 SO-DIMM RAM, since it makes direct contact with those chips.)

This is more cosmetic / ease-of-use than anything, but I ended up attaching the Molex to barrel-style power adapters (the ultra-common 5.5mm x 2.1mm size) fed by wall-wart power adapters (12V / 1A). The Mellanox ConnectX-3 sips around 500-600 milliamps, so 1A is plenty.
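To put numbers on "1A is plenty", here is a small Python power-budget sketch. It only uses the figures quoted above (12V / 1A wall wart, ~500-600 mA card draw); the function names are hypothetical, and actual draw may vary with load and what is plugged into the SFP cage.

```python
# Rough power budget for the 12 V wall wart feeding the card via Molex.
# Figures from the post: card draw ~500-600 mA, supply rated 12 V / 1 A.

SUPPLY_VOLTS = 12.0
SUPPLY_AMPS = 1.0


def card_watts(card_amps: float, volts: float = SUPPLY_VOLTS) -> float:
    """Power (W) drawn by the card at the given current."""
    return volts * card_amps


def headroom_amps(card_amps: float, supply_amps: float = SUPPLY_AMPS) -> float:
    """Current (A) the supply can still deliver beyond the card's draw."""
    return supply_amps - card_amps


if __name__ == "__main__":
    supply_w = SUPPLY_VOLTS * SUPPLY_AMPS
    for draw in (0.5, 0.6):
        print(
            f"{draw * 1000:.0f} mA -> {card_watts(draw):.1f} W of {supply_w:.0f} W, "
            f"{headroom_amps(draw):.1f} A spare"
        )
```

Even at the high end of the quoted draw, the card uses well under the supply's 12 W rating, so a 1A wall wart leaves some margin.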

The front is basically unchanged. Maybe I’ll embed some letters on the front that say Thicc Skull or something.

I’ve printed the final version in a few colors:


Please feel free to comment and show off your own creations!


Screw you, expensive Thunderbolt 3 adapters.

Enjoy the speed.

Like a true geek, love it.


Does this work with VMware?

Yes, it absolutely does. (Sorry for the late reply.)

Did you use the screw holes in the print to secure the casing?

I haven’t gotten around to finding the correct length of screw for this, but the holes are there for this purpose!

M2 x 20mm screws just fit to hold it in place. I wouldn’t put any pressure on it, but it seems to fit nicely. I’m having dropout issues with one NUC, but the rest seem good.

Here we are, July 2020. I’ve continued to use this setup over the last year, and I ended up having to get rid of the PCIe ribbon cable. There simply isn’t a high-quality one that I can procure easily. They are junk.

I junked the cases, and now run them open-case, with the 10GbE card plugged directly into the white x4 M.2 adapter.

Great work! Do you know if the ring is big enough to hold a 3.5″ drive? Thanks

This ring isn’t, but one could create a slip-in case for one. The only annoying thing would be powering the device… and hooking it up to an M.2 2280 SATA header adapter. Don’t get me thinking about this! D:

This is awesome! I’ve got everything but the NICs, and printing is still left to do.

I would have loved to see this mounted the opposite direction, with the ports on the side in order to support the mcx312a-xcbt for a dual 10Gb NIC (longer card). Mounts for a fan to exhaust through the honeycomb at the bottom would be awesome too!

I wish I could manipulate the drawing, but I am not that talented. 🙂

Hi

I have tried the same, and as soon as I use a PCIe x4 extension cable, VMware ESXi no longer recognizes the network adapter. In the BIOS I can see the network card. Any suggestions?

My extension cable looks pretty much the same and is about 19cm long, so that shouldn’t be a problem.


Nice! Could you please share new pictures of your current setup?

Hi

I just tried the exact same components on my Intel NUC Skull Canyon. I also tried another set of green adapters, but I can’t get the NUC to detect the PCI cards. It’s only detecting NVMe/SATA drives. Is there any other setting I have to change with the latest BIOS? I tried BIOS versions 67 and 63.

I also tried without a PCI cable, connecting directly to the board. Cards tried include an Intel Quad port gigabit VT/1000, an Intel X540 10Gb NIC, etc. These cards work in direct PCI slots on other machines I have.

Did you solve your issue?

> I have since modified my setup to NOT use this ribbon cable.

Are you using a different riser cable, or something else?

No, I’m just using the Molex adapter and the white adapter (https://www.amazon.com/gp/product/B074Z5YKXJ/ref=ppx_yo_dt_b_asin_title_o00_s00?ie=UTF8&psc=1). The same adapter works when I put it in my Asus motherboard with an AMD Ryzen 5 3600. Only in the NUC is it not detected. Are you on the latest BIOS?

Thanks

Solved this issue. My problem was a bad 12V supply. Once I switched to a new 12V 3A power supply, the cards work. I also used this extender: https://www.amazon.com/gp/product/B07Y46WTD1/ref=ppx_yo_dt_b_asin_title_o01_s00?ie=UTF8&th=1

I tried another adapter from ADT and had the same trouble.

The BIOS detects the network card, but ESXi 6.7 doesn’t.

I am on the latest BIOS.

Only when plugged directly into the riser card is my network card recognized by ESXi 6.7.

There must be something simple: maybe voltage, or a BIOS option that prevents the operating system from recognizing the card when the cables are longer.

Did you have the 12V power connected? The ADT cable I tried worked fine and has been reliable in the medium term.

Yes, I had 12V connected.

Hi guys, I am going to try this.

I’m trying to get all the bits chosen and sent to Australia.

Will an Intel-based X540-T2 card work OK?

Also, can I draw power from the SATA header on the board, or is there an easier solution?

I like the idea. Could this work as a portable external version that connects to any Thunderbolt port? Like a big version of the Sonnet?

Use an M.2 enclosure with a Thunderbolt cable, installed in a box with the riser, PCI-E ribbon cables and power.

I know this is an old post, and things might not have lined up cost-wise for this back in 2019, but I wonder if you ever thought of using Thunderbolt in its networking bridge mode? One 4-port Thunderbolt hub, and direct-connect the boxes. Best of all worlds… 10Gbit link speed, full duplex. OWC currently sells a suitable hub for $150… not sure if something like it was available back then or not. It’s a shame that the Skull Canyon boxes didn’t come with two Thunderbolt ports like the newer NUCs do, so you could just daisy-chain them.

Ah… but I just looked it up, and ESXi doesn’t support Thunderbolt networking. Windows, Linux and Mac do, but VMware doesn’t. Oh well!

Thanks for the article. It is fascinating even in 2024.